Introduction

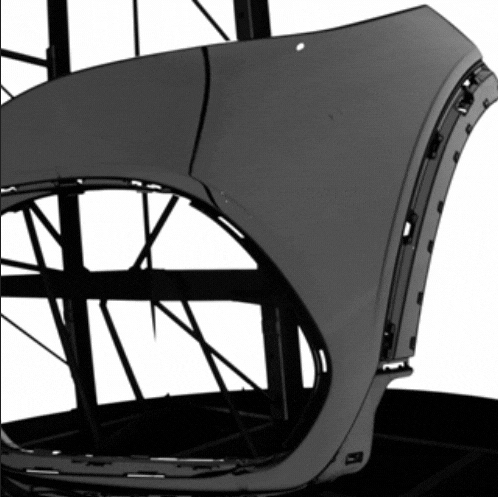

In previous articles, we explored classification and object detection, two fundamental pillars of computer vision based on deep learning. Classification allows us to identify the type of object in an image, such as distinguishing between different components or types of parts. Detection not only identifies these objects but also specifies their location within the image by drawing bounding boxes around them. Now, we take a step further toward a technology that not only identifies and locates but also precisely outlines the edges of objects: segmentation.

Segmentation process

Segmentation is a process that divides an image into several regions, assigning a specific class to each one. Unlike object detection, which uses bounding boxes to locate elements, segmentation identifies the exact shapes and outlines areas in detail. In a quality inspection process, segmentation makes it possible to accurately measure a defect that runs diagonally, avoiding oversized bounding boxes that make analysis difficult. This technique can also be used to remove irrelevant elements. For example, it can eliminate noisy backgrounds containing elements similar to the defects we actually want to analyze, enabling more precise object detection with fewer false positives.

Types of segmentation

There are two main types of segmentation: semantic segmentation and instance segmentation. Semantic segmentation identifies and classifies all areas that share the same characteristics, such as removing the background to focus on relevant parts. Instance segmentation, on the other hand, distinguishes individual objects within the same class, allowing for precise measurement of specific areas such as scratches, rubber seals, wiring, or welds.

What are the approaches to training segmentation models?

There are two main approaches. On one hand, custom models are trained with data specifically labeled for a particular use case. This approach ensures very precise results by adapting to the specific characteristics of each environment. On the other hand, foundational models, previously trained with generic data, allow objects to be segmented automatically without additional training. These models are useful for tasks like separating relevant components from complex backgrounds without further adjustments. Foundational models are ideal when manual labeling is not feasible or specific data is limited.

Today, thanks to new models and advances in hardware, it is possible to perform complete segmentation on high-resolution images in real time. This represents a significant leap forward for quality control processes in the automotive industry.

Conclusion

To sum up, segmentation is a powerful tool that complements classification and object detection, providing a level of detail that enables more precise analysis for complex tasks. At Eines Vision Systems, we leverage state-of-the-art models and advanced tools for both labeling and prediction, ensuring robust and efficient solutions. In the next article, we will explore anomaly detection, a key tool for identifying unexpected defects and ensuring quality in manufacturing processes when there are not enough examples of incorrect parts or defects.